Facial recognition software has been banned in many cities across America. No problem for Technocrats at Veritone. Police departments are doing an end run around pesky laws and statutes by using Veritone’s non-biometric body identification software instead, all based on AI, of course. This is unprecedented because it gives police a brand new tool to use against citizens.

The CEO of Veritone is blatant about it:

“The whole vision behind Track in the first place,” says Veritone CEO Ryan Steelberg, was “if we’re not allowed to track people’s faces, how do we assist in trying to potentially identify criminals or malicious behavior or activity?”

Steelberg says, “We like to call this our Jason Bourne app.” He is expecting court challenges. ⁃ Patrick Wood, Editor.

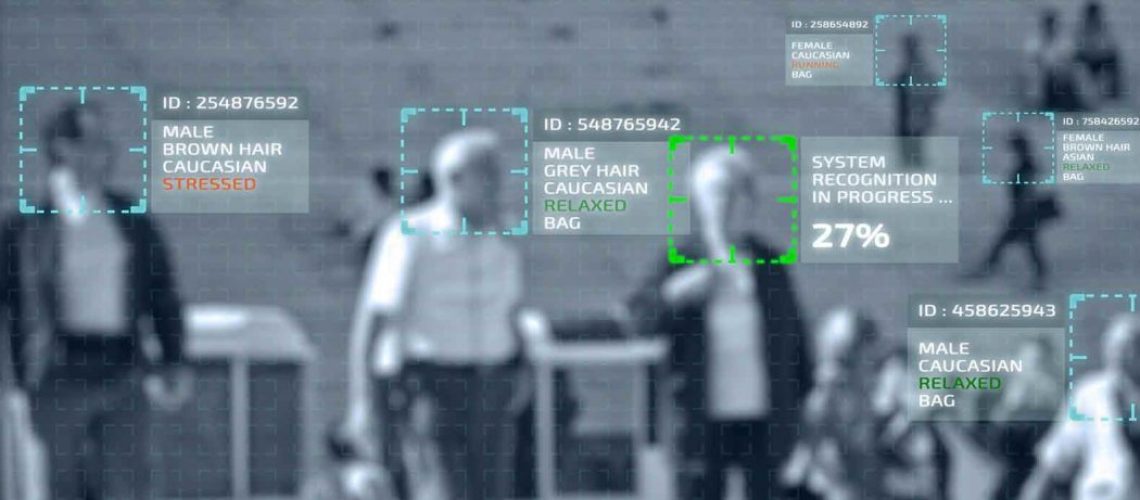

Police and federal agencies have found a controversial new way to skirt the growing patchwork of laws that curb how they use facial recognition: an AI model that can track people using attributes like body size, gender, hair color and style, clothing, and accessories.

The tool, called Track and built by the video analytics company Veritone, is used by 400 customers, including state and local police departments and universities all over the US. It is also expanding federally: US attorneys at the Department of Justice began using Track for criminal investigations last August. Veritone’s broader suite of AI tools, which includes bona fide facial recognition, is also used by the Department of Homeland Security—which houses immigration agencies—and the Department of Defense, according to the company.

“The whole vision behind Track in the first place,” says Veritone CEO Ryan Steelberg, was “if we’re not allowed to track people’s faces, how do we assist in trying to potentially identify criminals or malicious behavior or activity?” In addition to tracking individuals where facial recognition isn’t legally allowed, Steelberg says, it allows for tracking when faces are obscured or not visible.

The product has drawn criticism from the American Civil Liberties Union, which, after learning of the tool through MIT Technology Review, said it was the first instance it had seen of a nonbiometric tracking system used at scale in the US. The organization warned that it raises many of the same privacy concerns as facial recognition but also introduces new ones at a time when the Trump administration is pushing federal agencies to ramp up monitoring of protesters, immigrants, and students.

Veritone gave us a demonstration of Track in which it analyzed people in footage from different environments, ranging from the January 6 riots to subway stations. You can use it to find people by specifying body size, gender, hair color and style, shoes, clothing, and various accessories. The tool can then assemble timelines, tracking a person across different locations and video feeds. It can be accessed through Amazon and Microsoft cloud platforms.

In an interview, Steelberg said that the number of attributes Track uses to identify people will continue to grow. When asked if Track differentiates on the basis of skin tone, a company spokesperson said it’s one of the attributes the algorithm uses to tell people apart but that the software does not currently allow users to search for people by skin color. Track currently operates only on recorded video, but Steelberg claims the company is less than a year from being able to run it on live video feeds.

Agencies using Track can add footage from police body cameras, drones, public videos on YouTube, or so-called citizen upload footage (from Ring cameras or cell phones, for example) submitted in response to police requests.

Read More: Police Skirt Facial Recognition Bans With New Type Of AI